Silicon Valley Chasing the Wrong Problems (This Week in Life With Machines)

Plus Losing Creativity, Fake People, and a Mindful Practice

Hi you,

TL;DR: A slower digest: (1) real innovation often lives outside Silicon Valley, (2) we surrendered “authenticity” before AIs arrived—time to reclaim it, and (3) a simple practice to scroll without losing your mind. Plus a tiny palate cleanser.

This week’s Life With Machines Digest is a little different.

We’re turning inward. Instead of a bunch of new things to know or do, we’ve got a handful of ideas to digest. To sit with. To question. To feel.

The AI news cycle, like all news cycles now, hits full blast. There’s literally no way to keep up with it all, so let’s not try. Instead, we’re going for something more deliberate. We’ve learned that without space to process, we risk becoming passive consumers of stories rather than active participants in meaning-making. So this week: less overwhelm, more orientation.

This is a digest to think about. With our human minds.

Let’s begin.

🧠 Things You Should THINK About

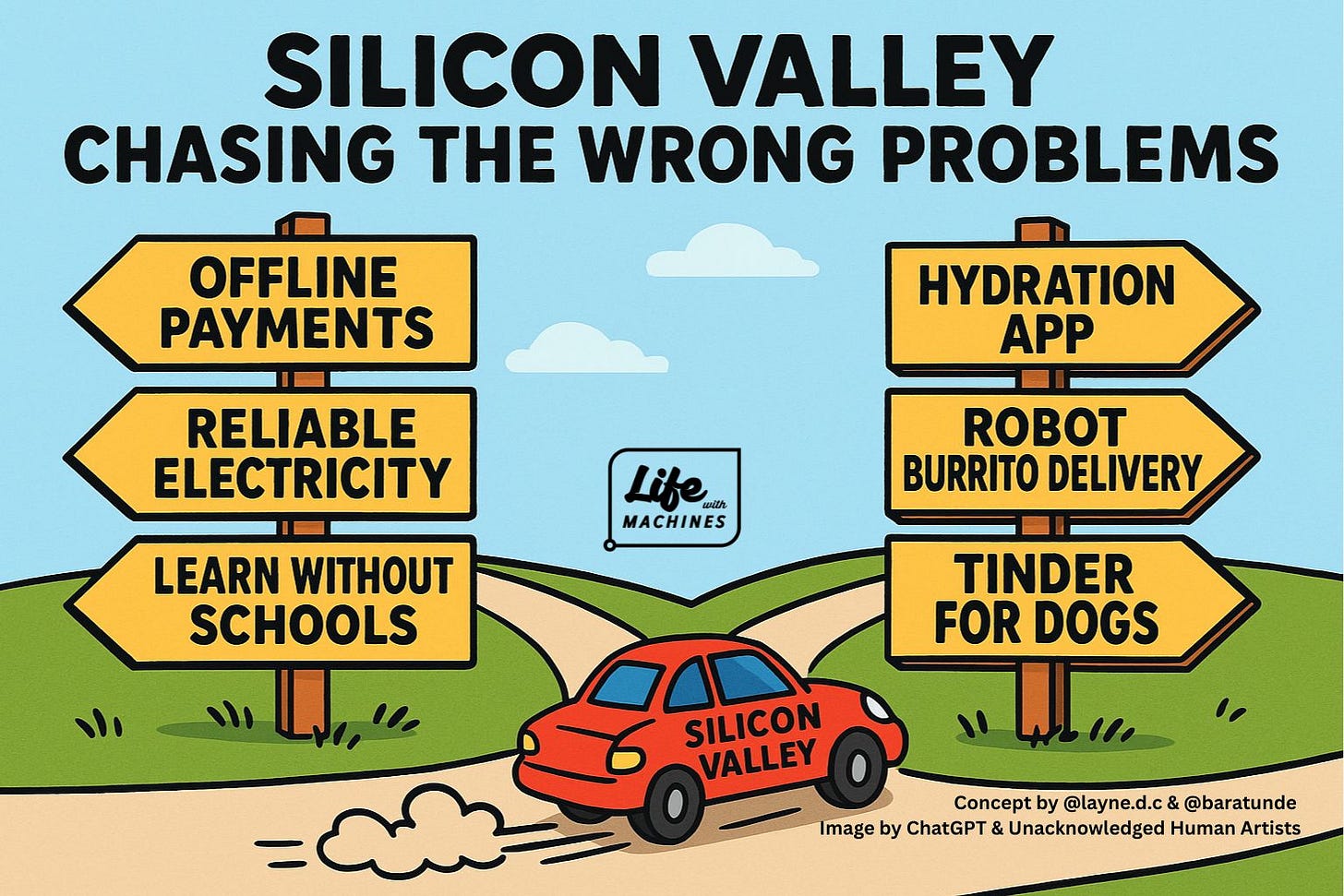

Silicon Valley Is Solving the Wrong Problems. Real Innovation Is Happening in Africa

From: Chika Uwazie (Instagram)

Entrepreneur and Afropolitan podcast host Chika Uwazie shares a story that says a lot with very little: a Silicon Valley VC passed on a Nigerian offline payments app—vital where connectivity is spotty—while funding an app that reminds you to drink water.

She writes:

“Silicon Valley’s problem isn’t talent. It’s perspective.”

“True innovation isn’t making easy lives easier. It’s making impossible lives possible. That’s what builders across Africa are already doing without Silicon Valley’s blessing, capital, or permission.”

Why We Care:

I have long ranted against the types of problems excessively self-loving Silicon Valley types celebrate solving: get me my burrito faster; remove this “friction” aka human contact from my “user experience” aka life; and improve my lifestyle from that of a noble to that of a royal. We've applied the most advanced computing and mathematical resources to optimize…. (checks notes)… advertising delivery. Meanwhile the planet is trying to shake us off like a dog with fleas (spoiler: we’re the fleas), and literal Nazis are making a comeback.

We have real problems worthy of real solutions, including technical ones. But if decision-makers aren’t close to those problems, they won’t fund the right fixes. That’s part of why so much generative AI feels meh: infinite profit is chasing shallow use cases.

This story made me think of two people immediately. The first is Shaka Senghor, a friend I made at the MIT Media Lab after he’d served 19 years in prison. I actually interviewed him for my old Fast Company column about how the innovation in a prison yard far exceeds anything from an Ivy League kid in Palo Alto. Check out Shaka’s new book, How To Be Free, btw. It’s dope.

The second is my friend Derrick Ashong, whom I met in college and included in my memoir How To Be Black. His diasporic perspective (born in Ghana, raised in Saudi and Jersey) is one I’ve always found clarifying and valuable. Plus I remember when his own startup idea was met with derision by the gatekeepers of the Bay Area, forcing him to seek funding and talent far beyond Silicon Valley and even the United States. He’s now the head of a company with major business partnerships across the planet, and I asked him for his own brief perspective on Chika’s IG carousel. Here’s what he WhatsApp’d me back:

"Like a poorly trained AI, a VC model built on pattern matching can't recognize a solution to a problem it's never experienced. This doesn't make the problems any less real, nor the solutions any less viable."

- Derrick Ashong, Founder/CEO, Take Back the Media

We Chose to Destroy Human ‘Authenticity’ Long Before AI Showed Up

From: Carlo Iacono (Substack)

This one might sting.

In a sharp and scathing essay, Carlo Iacono argues that AI isn’t stealing our authenticity. We surrendered it willingly — when we started optimizing our lives to increase productivity and reformatting our thoughts into threads to post them on platforms.

Some standout lines:

“The great irony of 2025 isn't that machines learned to think. It's that we finally admitted we'd forgotten how.”

“It’s just the latest chapter in a story that started when we decided creativity was content and art was intellectual property.”

“The difference between you and an AI isn't that you're original and it's derivative. The difference is that you're slow and inefficient at being derivative.”

“The machines aren't coming for your humanity. They're showing you where you abandoned it.”

Why We Care:

I’ve repeatedly warned that the greatest risk of automation wasn’t job loss but humans becoming machines, and we’ve already done that by optimizing ourselves based on algorithmic rewards.

Check out THIS moment from our episode with Rahaf Harfoush (00:41:26).

I’d argue further that the roots of this are not merely in the current technology era but emerge deeply from our practice of capitalism. Once humans started seeing our value as our economic output (and not familial relationship, spiritual essence, or membership in the community of life), we greatly restricted our fate. Output maximization is a machine’s reason for being. Humans (should) have a higher calling.

Cut to today. AI is not to blame for the loss of human creativity. That’s where I align with Carlo’s essay, which I see as a prompt to humanity and a general call to originality.

What do you think?

What are some ways we can reclaim what makes us deeply, beautifully human?

Democracies Should Ban “Counterfeit Humans”

From: Yuval Noah Harari (NY Times)

Harari warns that AIs can mass-produce intimacy—not just misinformation—and that this may be the deeper democratic threat. Some choice quotes:

“In a political battle for minds and hearts, intimacy is a powerful weapon. An intimate friend can sway our opinions in a way that mass media cannot. Chatbots … are gaining the rather paradoxical ability to mass-produce intimate relationships with millions of people. What might happen to human society and human psychology as algorithms fight algorithms in a battle to fake intimate relationships with us, which can then be used to persuade us to vote for politicians, buy products or adopt certain beliefs?”

“democracies should protect themselves by banning counterfeit humans…If tech giants and libertarians complain that such measures violate freedom of speech, they should be reminded that freedom of speech is a human right that should be reserved for humans, not bots.”

Why We Care:

When I read the piece, I immediately found some issues with Harari’s framing: that the only pre-modern democracies were Rome and Athens, and that anything big collapses into authoritarianism. That’s a pretty binary and disprovable take if you look beyond Europe for precedent. However, the point he’s making about allowing AIs to influence us through our relationships with them is an important one. By mass producing intimate relationships, we are also guaranteeing the mass destruction of trust.

We already know bots have compromised our ability to trust what we see online. Sockpuppet accounts flood social media. Fake people push real narratives, and real people push fake narratives. Meanwhile, we waste time debating nonsense because the signal is buried in profit-seeking noise. Now imagine those bots not just manipulating our friends but becoming them. And our advisors. And our emotional support systems. In a world where those bots don’t truly answer to us but to centralized, profit-motivated corporate entities with massive incentives to capture our attention, we will have invited foxes into every corner of the hen house.

If we allow AIs to masquerade as humans en masse, especially in vulnerable, relational contexts, we might irreversibly fracture our collective ability to discern what’s real. As much as I value the perspectives offered by AI systems in all sorts of contexts, from medical to business strategy and creative, I increasingly believe we should draw stylistic lines between the appearance and behavior of such systems and IRL human beings. Let bots be bots and humans be humans. They don’t have to be us to be of value to us.

🌀 Something To TRY

How To Look at Social Media — Without Losing Your Mind

From: The Etymology Nerd (Substack)

Adam Aleksic offers a simple but powerful idea: the way you look at social media shapes how it shapes you. Most of the time, we scroll in a kind of autopilot mode — absorbed, entertained, but not really noticing what’s happening to us. That’s when the algorithms have the most influence.

His suggestion isn’t to stop scrolling, but to practice breaking that spell in small ways. Here’s a quick exercise you can try in under two minutes:

Scroll as usual. Let yourself get pulled in.

Then pull back. Instead of focusing on the video or post, look at the feed itself. How often are you being shown ads? What patterns repeat?

Notice the breaks. When you pause — to save a video, click a profile, or hit the end of a thread — ask: Why am I here? How did I get here?

Re-enter with awareness. Keep scrolling if you want, but now you know what forces are at play.

Do this once in a while and you start training your attention. You still get to enjoy the fun parts of social media, but you also build a muscle of noticing — which makes it harder for the platform to steer you without your consent.

Why We Care:

This reminds me of a meditation retreat I took many years ago at the Kripalu Center in Massachusetts. We did a walking meditation, which sounded nonsensical, even dangerous, at the time. But what I recall most was that it was about noticing, tuning in deeply to all your senses as you move through the world, including internal responses to the external environment. Hear the bird, feel the breeze, notice your internal reaction. Move deliberately. The goal wasn't to catalog everything but just to be actively aware.

I think the author's advice in this piece aligns well with that and can be helpful in interactions with AI systems, especially chatbots. Notice not just what they tell you, but what they’re nudging you toward. Ask whether that nudge is what you actually want or need. That pause — that moment of awareness — is where our cognitive agency lives.

😂 Palate Cleanser

From the @techroastshow — ChatGPT… saying goodbye to ChatGPT:

🎬 on Instagram

Enjoy a surprisingly emotional goodbye between a chatbot and itself. Because of course.

If you made it this far, thank you.

If you felt anything along the way, even better.

Your turn—drop a comment:

What’s one real problem you wish top AI talent would tackle?

Where have you felt yourself optimize for the algorithm—and how might you undo that this week?

Until next time,

Don’t forget to pause.

(And hydrate. With or without an app.)

— Baratunde
