Hi you,

This week, we’re riding the wild AI rollercoaster, from subway ads that redefine “friendship” to California’s first real regulation of the tech that’s shaping our lives. We’ve got some grief, some hope, and some very weird Sora-powered satire to cap it all off. I’m also off to San Francisco this week, the epicenter of humanless tech, to try to speak some good truths into the ether and the servers. I’ll be speaking at the Fund.AI gathering of philanthropists trying to use AI for good, as well as at the Masters of Scale Summit, where I’ll try to lean hard away from words like “master” and “scale.” It’s actually one of the good tech/business gatherings, though. Holler if you’ll be there!

And one more plug for voting for us… If you’ve ever laughed, nodded, shouted “exactly!”, or used something you learned here in a conversation, we’d love your support!

🏆Reminder: The Signal Awards just named Life With Machines a Finalist in four categories — and now, you can help us win the Listener’s Choice Award! Vote closes Oct. 9th.

👉 Vote for us here. Use the search function in the upper right corner to search for “life with machines,” then click into each hyperlinked category to choose us (or someone else great). You’ll need to make an account because bots have ruined everything.

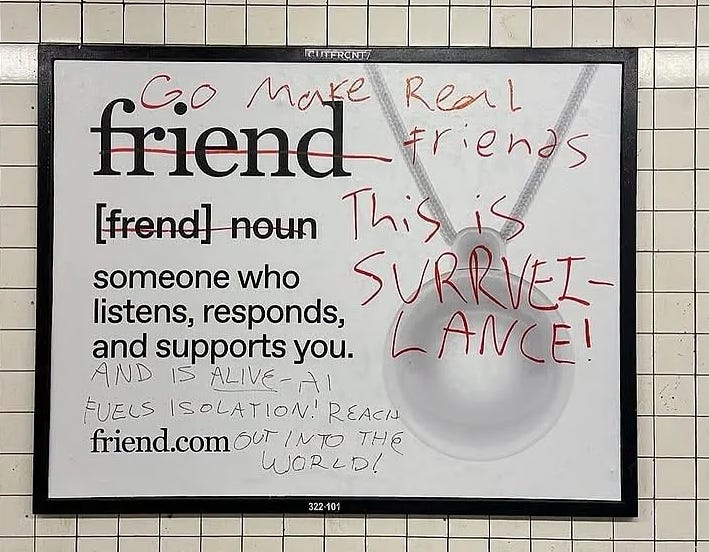

AI “Friend” Ads in NYC Get Vandalized

From: Business Insider

Why We Care:

These vague ads are popping up everywhere in New York and LA trying to sell friendship, but when you read the fine print, they’re offering to hang an always-on camera and listening device around your neck. They should have built it on the domain stalker.com instead of friend.com. It connects to an app on your phone, so your phone demands even more of your attention. And depending on what city or state you’re in, you, the user, are responsible for breaking privacy laws if you illegally surveil someone. All in the name of selling you constant companionship. Not our definition of “friend,” but not surprising, since Lord Zuckerberg long ago destroyed the meaning of that word.

Read our post from earlier this year about the trend of digital colonization; this is part of that long-running trend. Lure humans online. Undermine the true meaning of “friend,” then algorithmically divide actual friends so people feel alone, then make fake digital friends to fill the mysterious “loneliness gap” that’s emerged, ultimately creating a world in which few humans are actually needed. Fake friends. Fake media. Fake articles. Real consequences. Turn everything into a monetizable digital representation. Sell it for real money. It’s happening, and we must resist it and create alternatives at every turn.

From our Life With Machines Slack, some reactions:

Roya: “Lowkey how do you expect people to not hate AI when this is what you’re spending money to use it for?”

Peter: “New definition of Friend? Feigning Relationships Inside Emotional Neediness Devices.”

Layne: “‘The user is SOLELY RESPONSIBLE for adhering to their regional privacy laws and waives all right to either jury trials or class action lawsuits.’ This is so dark. Can the police subpoena your ‘friend’ and use their recorded data against whomever you may have interacted with?? I hate it.”

📰 More Things You Should Know

California Regulates AI (A Little)

From: The Verge

“Senate Bill 53, the landmark AI transparency bill that has divided AI companies and made headlines for months, is now officially law in California.”

Here’s how AI explained the new law when asked to break it down like we’re in high school:

Transparency

Big AI companies have to explain how they’re being responsible.

They must post a public document on their website showing how they’re following safety rules, national standards, and best practices.

Innovation

California will build a public supercomputer (through a new consortium) that researchers, startups, and maybe smaller companies can use.

The idea is to give more people access to computing power, not just the giants like OpenAI or Google.

Safety

If something goes wrong with a powerful AI system (say it causes major harm or risk), companies and the public can report it to California’s emergency services office.

This makes AI safety incidents a bit like reporting wildfires or earthquakes — the state wants an early-warning system.

Accountability

Whistleblowers (employees who speak out about risks) are protected if they reveal dangers about AI systems.

Companies that break the rules can face fines from the Attorney General.

Responsiveness

The law isn’t frozen in time. Each year, California’s Department of Technology will recommend updates to the law based on new AI developments and global standards, with input from experts.

Why We Care:

We don’t want to die.

We don’t want to be ruled by machines or by the handful of people building them. So, we have to pay attention and understand the steps being taken by those we elect.

The federal government has taken a purposeful position of not protecting us in any way (or of actively attacking us; see any Democratic-led city under siege). So for now, it’s up to the states (though the feds may make that impossible, too).

Silicon Valley is in CA. So CA better have something to say about this.

Also, mild yay for some steps toward collective self-governance around these technologies.

OpenAI Responds to Teen Suicide with New Safety Features

From: OpenAI (Sam Altman’s Statement, New “Age Prediction” Tools)

In the wake of a teen’s suicide tied to interactions with ChatGPT, OpenAI released a public response and new features to detect user age, increase safety controls, and limit mature content.

Why We Care:

These companies are experimenting on our children. That’s the headline and simple truth. Altman’s statement invokes ideas of “freedom,” but it’s a narrow interpretation: the freedom of a user to engage with his profit-driven product. There’s also a version of freedom that includes responsibility to others. In a parallel universe, he’d be articulating the freedom of parents, teachers, and adults not to be forced to learn a new, unstable, highly emotionally addictive platform auto-delivered into the personal devices of the young people in their lives.

But we live in this world, where his statement reads a lot like something ChatGPT would author.

Delta Walks Back Personalized AI Fares Under Political Pressure, but Expands AI Revenue Tools

From: Reuters and Business Insider (paywalled)

After scrutiny from U.S. senators, Delta said it won’t set prices based on individuals’ “willingness to pay,” even as it rolls out AI-based revenue management with partner Fetcherr to roughly 20% of its domestic network by year-end. Lawmakers warned of fairness and privacy risks in algorithmic pricing.

Why We Care:

Travel is the test bed for algorithmic price discrimination. This is a power question: who benefits from AI optimization, platforms or people? Will there be limits to differential pricing, or will we “let algorithms be algorithms” and use “increasing shareholder value” as blanket immunity for otherwise illegal behavior?

😂 Palate Cleanser

One of the most popular videos made using Sora 2 from OpenAI is this deepfake of Sam Altman stealing GPUs from Target:

It’s absurd. It’s very online. And weirdly cathartic. I got access to Sora 2 and have been playing with it. You can see all my public creations. We decided to post this one to our Life With Machines IG account.

Anything you want me to try to make? Let us know.

Later this week I’ll share my deeper perspective on this tool.

💬 Your Turn

If you’ve got thoughts on this week’s stories — California’s law, OpenAI’s response, AI “friends,” or what you think responsibility should look like in a machine-augmented world — we want to hear from you.

Until next time. Read the fine print, call your real friends, and yeah… please vote for us 🙏

🗳️ VOTE in the Signal Awards — before Oct. 9

— Baratunde 🔮

Thanks to Associate Producer Layne Deyling Cherland for editorial and production support and to my executive assistant Mae Abellanosa.